🧠 The Architecture of Alpha: How Renaissance Technologies Engineered the Most Successful Investment System in History

The fund you’ve never heard of returned 66% a year for three decades—because it built an adaptive signal engine, not just a model

Inspired by Acquired’s podcast on Renaissance Technologies

🎧 Listen to the full episode on Spotify

If You Still Think Renaissance Technologies Won Because of a Magic Model, You’re Missing the Entire Point

Renaissance Technologies — and its flagship Medallion Fund — is often treated like a black box.

Its reported returns are the stuff of finance legend:

~66% annualized gross returns over three decades

~39% net of fees (after a 5% management fee and a 44% performance fee)

Sharpe ratios as high as 7.5

Zero down years from 1990 through 2022

But what made Renaissance work wasn’t alpha in the conventional sense.

It wasn’t a brilliant trade.

It wasn’t some hidden factor.

And it certainly wasn’t a static “model.”

What they built — and what the Acquired podcast made clear in their outstanding three-hour breakdown — was a real-time probabilistic system designed to ingest massive data, discover weak signals, execute with near-zero leakage, and rebuild itself faster than its edge decayed.

This wasn’t a quant strategy.

This was an architecture for alpha.

The Medallion Engine: A Probabilistic Learning Machine

Let’s make this tangible.

What did Renaissance actually build?

They built a self-regulating loop with six integrated layers:

1. Data Ingestion

Multi-asset tick data, macro signals, fundamentals, market depth

Timestamp-aligned, replayable, lossless

Structured for both historical backtesting and live signal scoring
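To make "timestamp-aligned, replayable" concrete, here is a minimal sketch of merging several time-sorted feeds into one ordered event stream. The `Event` type, the feed names, and the payload fields are hypothetical illustrations, not anything Renaissance has disclosed.

```python
import heapq
from typing import Iterable, Iterator, NamedTuple


class Event(NamedTuple):
    ts: int        # event timestamp, e.g. nanoseconds since epoch
    source: str    # which feed produced the event (hypothetical names)
    payload: dict  # raw fields, left untouched so replay stays lossless


def replay(*feeds: Iterable[Event]) -> Iterator[Event]:
    """Merge individually time-sorted feeds into one time-ordered stream.

    heapq.merge is lazy, so the same function can sit behind a backtest
    (reading stored events) or a live path (reading from generators).
    """
    return heapq.merge(*feeds, key=lambda e: e.ts)


ticks = [Event(1, "ticks", {"px": 100.0}), Event(4, "ticks", {"px": 100.2})]
depth = [Event(2, "depth", {"bid_sz": 500}), Event(3, "depth", {"bid_sz": 450})]

merged = list(replay(ticks, depth))
order = [(e.ts, e.source) for e in merged]
```

Because the backtest and the live scorer consume the same ordered stream, a feature computed in research sees events in the same sequence it would see in production.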

2. Feature Engineering

Thousands of time-dependent features per instrument

Rolling returns, vol regimes, liquidity proxies, lead-lag effects

Dynamically refreshed — not static factor libraries

Cross-sectionally ranked, reweighted, and joined in-stream
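As a rough illustration of the feature types listed above, the sketch below computes a rolling return, a rolling volatility, and a cross-sectional rank using only the standard library. The function names, window lengths, and tickers are assumptions chosen for the example.

```python
import math
from statistics import pstdev


def rolling_return(prices, window):
    """Simple return over the last `window` steps; None until enough history."""
    return [
        None if i < window else prices[i] / prices[i - window] - 1.0
        for i in range(len(prices))
    ]


def rolling_vol(prices, window):
    """Population std dev of the last `window` one-step log returns."""
    rets = [math.log(prices[i] / prices[i - 1]) for i in range(1, len(prices))]
    out = [None] * len(prices)
    for i in range(window, len(prices)):
        out[i] = pstdev(rets[i - window:i])  # returns ending at price index i
    return out


def cross_sectional_rank(values):
    """Map {instrument: value} to ranks scaled into [-0.5, 0.5].

    Ties are broken by sort order, which is good enough for a sketch.
    """
    names = sorted(values, key=values.get)
    n = len(names)
    if n == 1:
        return {names[0]: 0.0}
    return {name: i / (n - 1) - 0.5 for i, name in enumerate(names)}


# Hypothetical three-instrument universe.
prices = {
    "AAA": [100.0, 101.0, 102.0, 104.0],
    "BBB": [50.0, 50.0, 49.0, 48.0],
    "CCC": [20.0, 20.5, 20.5, 20.6],
}
momentum = {name: rolling_return(p, 3)[-1] for name, p in prices.items()}
ranks = cross_sectional_rank(momentum)
```

Ranking across instruments at each timestamp turns raw values with very different scales into comparable scores, which is one common way to combine many weak features.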

3. Signal Detection

Probabilistic models (Markov chains, ensemble trees, online learners)

Signals often only 50.5–51.5% accurate — but acted on in size and volume

Continuous signal decay scoring

Kill thresholds tied to live Sharpe degradation
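The arithmetic behind "barely better than a coin flip, but at volume" can be sketched in a few lines. The model below is a deliberate simplification: every bet pays +1 or -1, all bets are the same size, and all bets are independent. The bet counts are hypothetical.

```python
import math


def per_bet_sharpe(p_win: float) -> float:
    """Sharpe of one bet paying +1 with probability p_win and -1 otherwise."""
    mean = 2.0 * p_win - 1.0
    std = math.sqrt(1.0 - mean * mean)
    return mean / std


def annual_sharpe(p_win: float, bets_per_year: int) -> float:
    """Sharpe of the sum of independent, identically sized bets.

    The mean grows with N and the std dev with sqrt(N),
    so the Sharpe ratio grows with sqrt(N).
    """
    return per_bet_sharpe(p_win) * math.sqrt(bets_per_year)


few = annual_sharpe(0.505, 1_000)      # roughly 0.32
many = annual_sharpe(0.505, 100_000)   # roughly 3.16
```

Under these assumptions a 50.5% hit rate is nearly worthless at a thousand bets a year and formidable at a hundred thousand. Real bets are correlated, which shrinks the effective N, so the sketch is an upper bound on what volume alone buys.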

4. Execution Integration

Micro-order slicing, randomized routing

Market impact forecasting embedded in the model

Real-time fill feedback loops to rescore alpha post-trade

Execution treated not as plumbing — but as signal integrity maintenance
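A minimal sketch of micro-order slicing with randomized child sizes follows. The sizing rule (uniform jitter around a mean child size) is an assumption made for illustration; it does not cover venue selection or timing, and it is not a description of Renaissance's actual execution logic.

```python
import random


def slice_order(total_qty, mean_child, jitter=0.5, seed=None):
    """Split a parent order into child orders with randomized sizes.

    Each child is drawn uniformly from mean_child * (1 +/- jitter); the
    final child is capped at whatever quantity remains, so the child
    sizes always sum to total_qty.
    """
    if total_qty <= 0 or mean_child <= 0 or not 0.0 <= jitter < 1.0:
        raise ValueError("need positive sizes and 0 <= jitter < 1")
    rng = random.Random(seed)
    lo = max(1, round(mean_child * (1.0 - jitter)))
    hi = max(lo, round(mean_child * (1.0 + jitter)))
    children, remaining = [], total_qty
    while remaining > 0:
        qty = min(remaining, rng.randint(lo, hi))
        children.append(qty)
        remaining -= qty
    return children


children = slice_order(10_000, mean_child=300, seed=7)
```

Irregular child sizes make the parent order harder to reconstruct from the tape than a run of identical clips would be, which is the point of treating execution as signal protection.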

5. Governance

Real-time monitoring of drift, turnover, and PnL attribution

Risk controls modeled as state transitions, not static rules

Anomalies surfaced by the system, not detected by humans

“Meta-signals” governed the health of the signal system itself
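One way to read "risk controls modeled as state transitions" is as a small state machine whose next state depends on both the current state and the latest measurement. The states, the drawdown thresholds, and the exposure multipliers below are all hypothetical.

```python
from enum import Enum


class RiskState(Enum):
    NORMAL = "normal"
    REDUCED = "reduced"
    HALTED = "halted"


# Exposure multiplier applied to target positions in each state.
EXPOSURE = {RiskState.NORMAL: 1.0, RiskState.REDUCED: 0.5, RiskState.HALTED: 0.0}


def next_state(state, drawdown, soft=0.02, hard=0.05, recover=0.01):
    """Transition on current drawdown (fraction of peak equity).

    Hysteresis: leaving REDUCED requires drawdown <= recover, a tighter
    level than the `soft` threshold that triggered the step down.
    HALTED is sticky here and needs a reset outside this function.
    """
    if state is RiskState.HALTED:
        return state
    if drawdown >= hard:
        return RiskState.HALTED
    if state is RiskState.NORMAL:
        return RiskState.REDUCED if drawdown >= soft else RiskState.NORMAL
    # state is REDUCED
    return RiskState.NORMAL if drawdown <= recover else RiskState.REDUCED


state = RiskState.NORMAL
path = []
for dd in [0.0, 0.025, 0.015, 0.008, 0.03, 0.06, 0.0]:
    state = next_state(state, dd)
    path.append(state)
```

A static rule would treat a 1.5% drawdown the same way every time. Here the response depends on how the system got there: the same reading keeps exposure reduced if the system is already in REDUCED, and does nothing if it is in NORMAL.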

6. Reinvention

The system was rebuilt — not tuned — every 1–2 years

Feature pipelines, model frameworks, execution logic all refreshed

System-level entropy prevented by culture + architecture

Bottom line:

Renaissance didn’t win by having better predictions.

They won because their entire machine was built to extend the half-life of edge.
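"Half-life of edge" can be given a number. The sketch below assumes a signal's measured edge decays exponentially and fits the decay rate by least squares on the log of the edge. Both the exponential form and the sample series are assumptions for illustration.

```python
import math


def estimate_half_life(edge_by_period):
    """Fit edge_t ~ edge_0 * exp(-lam * t) and return ln(2) / lam.

    Non-positive observations are dropped because their log is undefined;
    that biases the fit and is acceptable only in a sketch.
    """
    pts = [(t, math.log(e)) for t, e in enumerate(edge_by_period) if e > 0]
    if len(pts) < 2:
        raise ValueError("need at least two positive observations")
    n = len(pts)
    t_bar = sum(t for t, _ in pts) / n
    y_bar = sum(y for _, y in pts) / n
    sxx = sum((t - t_bar) ** 2 for t, _ in pts)
    sxy = sum((t - t_bar) * (y - y_bar) for t, y in pts)
    slope = sxy / sxx
    if slope >= 0:
        return math.inf  # no measurable decay in this sample
    return math.log(2) / -slope


# Synthetic edge that halves every 6 periods.
edge = [0.08 * 0.5 ** (t / 6) for t in range(12)]
half_life = estimate_half_life(edge)
```

Once the half-life is measurable, the race described in this piece becomes explicit: the rebuild cycle has to be shorter than the time it takes the current edge to fade.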

The Core Principle: Alpha is a System Property, Not a Model Output

This is the key misconception in modern quant circles:

Most funds treat alpha as a thing you discover.

Renaissance treated alpha as a property of systems that can adapt faster than signal decay.

They didn’t search for magical signals.

They engineered a feedback loop to detect, exploit, and sunset edge at scale.

In this view:

Features are temporary

Models are scaffolding

Execution is signal preservation

Sharpe is the system’s vital sign

Reinvention is mandatory, not optional

Alpha wasn’t a prize.

It was the residual effect of good system hygiene.

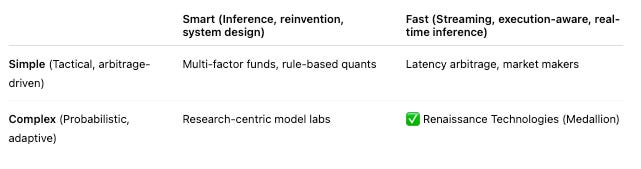

Speed and Intelligence: The Strategic 2x2

Brett Harrison, in the Acquired episode, outlined a strategic 2x2 for positioning quant firms along two axes: speed and intelligence.

Let’s build on that:

Most shops are strong on only one axis.

They are either smart but slow

Or fast but tactical

Renaissance operated in the rare top-right: Fast + Smart.

Streaming inference

Real-time risk telemetry

Signal decay tracking

Execution feedback loop

Full-stack system ownership

That quadrant is extremely hard to reach — and extremely defensible.

Sharpe Was Not a Goal — It Was a Constraint

If you want to know what Renaissance really optimized for, it wasn’t return.

It was Sharpe.

Sharpe ratio measures excess return per unit of volatility. But more than that, it reveals the efficiency of a system:

How consistent are your forecasts?

How much edge survives execution?

How well is your sizing calibrated?

How many signals degrade under stress?

In a system where most signals are only 50.5% accurate, Sharpe becomes the integrity check.

If a new feature improves returns but raises volatility by proportionally more, so that Sharpe falls, it’s a false edge.

Sharpe was the constraint that forced the system to:

Drop decaying signals

Reweight under stress

Adjust execution to preserve confidence

Refactor workflows, not just retrain models
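Treating Sharpe as a constraint can be sketched as an acceptance gate: a candidate change is kept only if it raises Sharpe, regardless of what it does to raw return. The function names, the `min_gain` parameter, and the sample return series are hypothetical.

```python
import math
from statistics import mean, stdev


def sharpe(returns, periods_per_year=252, risk_free_per_period=0.0):
    """Annualized Sharpe ratio from per-period returns (sample std dev)."""
    excess = [r - risk_free_per_period for r in returns]
    sd = stdev(excess)
    if sd == 0.0:
        raise ValueError("zero volatility: Sharpe is undefined")
    return mean(excess) / sd * math.sqrt(periods_per_year)


def accept_feature(baseline_returns, candidate_returns, min_gain=0.0):
    """Accept a candidate only if it raises Sharpe, not merely mean return."""
    return sharpe(candidate_returns) > sharpe(baseline_returns) + min_gain


baseline = [0.001, 0.002, 0.001, 0.002]      # small, steady gains
candidate = [0.010, -0.006, 0.011, -0.005]   # higher mean, far noisier

higher_mean = mean(candidate) > mean(baseline)
accepted = accept_feature(baseline, candidate)
```

In this toy data the candidate earns more on average but is rejected, because its extra return comes with disproportionately more volatility.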

Execution Was Part of the Model

This cannot be overstated.

Renaissance did not treat execution as post-processing.

Instead:

Models were scored on impact-adjusted alpha

Routing engines were adaptive to venue-level behavior

Orders were randomized to prevent signal leakage

Live fills were fed back into model scoring pipelines

Execution wasn’t just latency management.

It was preservation of probabilistic integrity under real-world constraints.
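"Impact-adjusted alpha" can be illustrated with a square-root impact model, a common empirical approximation in the market-microstructure literature. Whether Renaissance used this form is not known; the coefficient, volatility, and volume figures below are hypothetical.

```python
import math


def sqrt_impact_bps(order_qty, adv, daily_vol_bps, coeff=1.0):
    """Expected impact cost in bps under an assumed square-root law:

    impact = coeff * daily_vol * sqrt(order_qty / adv)
    """
    if adv <= 0 or order_qty < 0:
        raise ValueError("adv must be positive and order_qty non-negative")
    return coeff * daily_vol_bps * math.sqrt(order_qty / adv)


def impact_adjusted_alpha_bps(gross_alpha_bps, order_qty, adv,
                              daily_vol_bps, fees_bps=0.0, coeff=1.0):
    """Forecast alpha left over after expected impact and fees."""
    impact = sqrt_impact_bps(order_qty, adv, daily_vol_bps, coeff)
    return gross_alpha_bps - impact - fees_bps


# Same 5 bps forecast, two order sizes, in a name trading 1,000,000 shares a day.
small = impact_adjusted_alpha_bps(5.0, 100, 1_000_000, 200.0)
large = impact_adjusted_alpha_bps(5.0, 10_000, 1_000_000, 200.0)
```

Under these assumptions the same forecast is profitable at 100 shares and loss-making at 10,000, which is why scoring a model on gross alpha alone says little about whether it can be traded.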

System Governance Was Continuous

In most funds:

A model underperforms

A human reviews it

Changes happen monthly or quarterly

At Renaissance:

Signal degradation was tracked live

PnL attribution was probabilistic

Models were automatically suspended when their live Sharpe fell below a kill threshold

Stress scenarios were simulated in real-time

Meta-models governed model viability

This meta-governance layer is what allowed the system to remain coherent as it scaled.

It also ensured the system didn't blindly compound bad decisions — a problem that plagues many high-frequency or high-complexity pipelines today.
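A minimal sketch of automatic suspension: each signal carries a monitor that tracks a rolling Sharpe of its live returns and switches the signal off when that value drops below a kill threshold. The class name, window length, and threshold are assumptions for illustration.

```python
import math
from collections import deque
from statistics import mean, stdev


class SignalMonitor:
    """Suspends a signal when its rolling live Sharpe falls below a threshold."""

    def __init__(self, window=60, kill_sharpe=0.0, periods_per_year=252):
        self.returns = deque(maxlen=window)
        self.kill_sharpe = kill_sharpe
        self.periods_per_year = periods_per_year
        self.active = True

    def rolling_sharpe(self):
        if len(self.returns) < self.returns.maxlen:
            return None  # not enough live history yet
        sd = stdev(self.returns)
        if sd == 0.0:
            return None  # Sharpe undefined for a flat window
        return mean(self.returns) / sd * math.sqrt(self.periods_per_year)

    def update(self, live_return):
        """Record one live return and report whether the signal is still active."""
        self.returns.append(live_return)
        s = self.rolling_sharpe()
        if self.active and s is not None and s < self.kill_sharpe:
            self.active = False  # re-enabling is left to a separate review path
        return self.active


monitor = SignalMonitor(window=4, kill_sharpe=1.0)
live = [0.002, 0.001, 0.003, 0.002, -0.004, -0.003, -0.005]
statuses = [monitor.update(r) for r in live]
```

No human has to notice the degradation. The suspension is a consequence of the data, and the review happens after the signal has already stopped trading.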

The Most Important Loop: Reinvention Before Decay

Every ~12–24 months, Renaissance rebuilt core components of their architecture.

Not just:

Feature sets

Models

Parameters

But also:

Code pipelines

Storage structures

Execution frameworks

Signal governance logic

This wasn’t overhead. This was strategy.

By the time decay became visible, the next version of the system was already deployed.

They didn’t optimize models.

They optimized how quickly the system could reinvent itself without loss of signal continuity.

What This Means Today

Most modern decision systems are still designed as:

Static inference layers

With batch pipelines

And disconnected execution logic

Running in separate governance tracks

But if alpha is decaying faster…

If signals emerge in hours, not quarters…

If execution friction destroys statistical edges at scale…

Then you need:

Streaming ingestion

Real-time feature scoring

Execution-aware modeling

Sharpe-governed telemetry

And architectural agility as a first-class concern

In short:

You don’t need better predictions.

You need a system that outlasts bad ones.

Final Thought

Renaissance Technologies didn’t win because it had better trades.

It won because it treated the entire system — from data to inference to execution — as a live signal-processing architecture.

Its real insight wasn’t in math.

It was in system design.

And the most important takeaway?

Alpha isn’t a feature of clever models.

It’s a byproduct of intelligent, adaptive systems.

That’s what made Medallion unbeatable.

And that’s the bar for anyone building intelligent infrastructure — in trading, AI, or real-time decision science.

🎧 Listen to the source of inspiration:

Acquired: Renaissance Technologies (Spotify)