The Intelligence Reset – Part 4: Decay-Aware Performance

Your Next Risk Indicator

“If accuracy is your north star, you’re navigating by dead light.”

In Part 3, we introduced two foundational metrics—Relevance Half-Life and Reflex Latency—to track how quickly knowledge fades and how fast a system reacts.

But now we confront the system-level question:

How do we measure whether an AI system is still performing—not on average, but right now—as the world shifts underneath it?

That brings us to one of the most critical performance frameworks in adaptive AI:

Decay-Aware Performance (DAP).

📉 Why Accuracy Doesn’t Tell You Enough

Accuracy is a holdover from a simpler time:

Train-test splits

Static distributions

Clean labels and fixed classes

But in real-world environments—markets, battlefields, production lines—data distributions shift constantly.

Signals drift. Latencies vary. Assumptions break silently.

By the time your model’s KPI falls below its threshold, it’s too late.

You weren’t measuring the right thing.

🧠 What Is Decay-Aware Performance?

Decay-Aware Performance (DAP) measures how a model’s output quality evolves over time in relation to shifting context.

Instead of asking “How accurate is the model overall?”, DAP asks:

How does performance degrade across recent time windows?

Which inputs are losing relevance the fastest?

What contextual triggers (market, mission, mechanical) are behind the drift?

This is temporal performance analytics—tied to:

recency

context

responsiveness

🔍 How to Measure DAP

1. Segment Time Windows

Divide your model’s operational window into temporal slices:

Rolling 1-hour or 5-minute segments for real-time systems

Regime-aware windows for market shifts or battlefield phases
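Here is a minimal sketch of the segmentation step. It assumes each prediction is logged with a timestamp and with a ground-truth label that arrives later. The `Prediction` record and the `fixed_windows` and `regime_windows` helpers are hypothetical names used for illustration. The fixed windows here do not overlap (tumbling windows). A rolling variant would advance by a step smaller than the window length. Regime boundaries such as a market close or a mission-phase change are supplied by the caller.

```python
from bisect import bisect_right
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Prediction:
    timestamp: float  # seconds since an arbitrary epoch (assumed)
    y_true: int
    y_pred: int


def fixed_windows(preds, window_seconds):
    """Group predictions into consecutive, non-overlapping windows of equal length.

    Windows that contain no predictions are omitted.
    """
    buckets = defaultdict(list)
    for p in preds:
        buckets[int(p.timestamp // window_seconds)].append(p)
    return [buckets[k] for k in sorted(buckets)]


def regime_windows(preds, boundaries):
    """Split predictions at regime-change timestamps (e.g. market close, mission phase).

    A prediction stamped exactly on a boundary belongs to the regime that starts there.
    """
    edges = sorted(boundaries)
    buckets = defaultdict(list)
    for p in preds:
        buckets[bisect_right(edges, p.timestamp)].append(p)
    return [buckets[k] for k in sorted(buckets)]


# Usage: 15 predictions, one per minute; the model starts missing at t = 600 s.
preds = [Prediction(t, 1, 1 if t < 600 else 0) for t in range(0, 900, 60)]
five_min = fixed_windows(preds, window_seconds=300)
phases = regime_windows(preds, boundaries=[600])
print([len(w) for w in five_min])  # [5, 5, 5]
print([len(w) for w in phases])    # [10, 5]
```

Regime-aware windows can be uneven in length. That is intentional: the split follows the context change, not the clock.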

2. Track Metric Degradation

Within each window:

Measure KPIs such as accuracy, F1, and precision relative to their baseline values at training time, not as absolute scores

Visualize decay curves for each feature or submodel
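One way to make "relative to training" concrete is to subtract the training-time accuracy from each window's accuracy. The slope of the resulting curve then serves as a decay rate. The sketch below does this. Accuracy as the KPI, a least-squares slope as the decay measure, and the feature names `price_feed` and `order_book` are all illustrative assumptions. The same curve can be computed per feature or per submodel and ranked, which answers "which inputs are losing relevance the fastest?"

```python
def accuracy(pairs):
    """Fraction of (y_true, y_pred) pairs that agree."""
    return sum(t == p for t, p in pairs) / len(pairs)


def degradation_curve(windows, baseline):
    """Per-window accuracy minus the accuracy measured at training time."""
    return [accuracy(w) - baseline for w in windows]


def decay_slope(curve):
    """Least-squares slope of a curve against window index (change per window)."""
    n = len(curve)
    if n < 2:
        raise ValueError("need at least two windows to estimate a slope")
    x_mean = (n - 1) / 2
    y_mean = sum(curve) / n
    num = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(curve))
    den = sum((x - x_mean) ** 2 for x in range(n))
    return num / den


def rank_by_decay(curves):
    """Order features or submodels from fastest-decaying (most negative slope) to slowest."""
    return sorted(curves, key=lambda name: decay_slope(curves[name]))


# Usage: accuracy falls 0.9 -> 0.8 -> 0.7 against a training baseline of 0.92.
windows = [
    [(1, 1)] * 9 + [(1, 0)] * 1,
    [(1, 1)] * 8 + [(1, 0)] * 2,
    [(1, 1)] * 7 + [(1, 0)] * 3,
]
curve = degradation_curve(windows, baseline=0.92)
slope = decay_slope(curve)  # about -0.1 accuracy per window
ranking = rank_by_decay({"price_feed": curve, "order_book": [0.0, -0.01, -0.02]})
print(ranking)  # ['price_feed', 'order_book']
```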

3. Correlate Drift with Environmental Signals

Map DAP to external events:

Market volatility

Weather/sensor shifts

Campaign phase or terrain change

This is how you go from performance to diagnosis.
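As a first diagnostic pass, each external signal can be correlated with per-window performance and the signals ranked by strength. The sketch below assumes the metric and every signal have already been aggregated onto the same time windows. It uses Pearson correlation as one simple choice, which captures only linear co-movement and says nothing about causation. The signal names and values are hypothetical.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return 0.0  # a constant series carries no correlation signal
    return cov / (sx * sy)


def rank_drivers(per_window_metric, signals):
    """Rank external signals by how strongly they co-move with per-window performance."""
    scores = {name: pearson(series, per_window_metric) for name, series in signals.items()}
    return sorted(scores.items(), key=lambda kv: abs(kv[1]), reverse=True)


# Usage: per-window accuracy alongside two hypothetical environmental signals.
acc = [0.91, 0.90, 0.84, 0.78, 0.88]
signals = {
    "volatility": [0.10, 0.12, 0.30, 0.45, 0.15],
    "sensor_temp": [20.0, 21.0, 20.5, 21.0, 20.0],
}
ranked = rank_drivers(acc, signals)
print(ranked[0][0])  # volatility
```

A strong negative score (accuracy falls as the signal rises) marks a candidate trigger to investigate. It is not a confirmed cause.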

📊 What a DAP Dashboard Looks Like

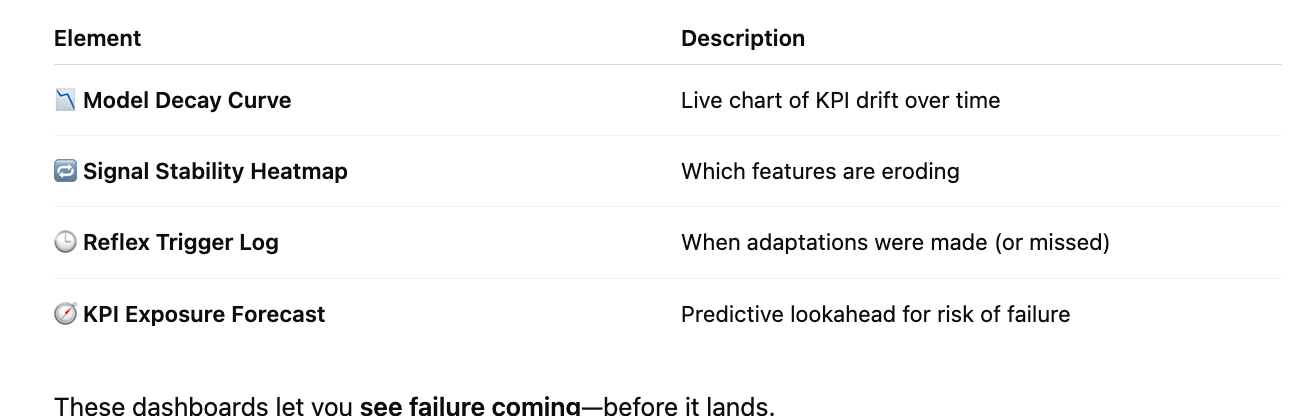

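An effective decay-aware dashboard, like the example above, shows per-window performance, decay curves, and the external events they correlate with.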

These dashboards let you see failure coming—before it lands.

🏭 Industry Examples

Finance:

Models show flat accuracy—but DAP reveals degradation during illiquid after-hours trading

Reflex latency is flagged as the real cause of the PnL drop, not the model architecture

Defense:

Mission-phase adaptation: sensor inputs degrade under night ops; DAP triggers fallback models

Weapon targeting logic swaps mid-mission as environmental drift exceeds safe tolerance

High-Tech Manufacturing:

QC models hold steady across batches—until new material lots shift pressure thresholds

DAP highlights early-stage drift before yield loss spikes

⚙️ From Monitoring to Mitigation

DAP isn’t just for display—it’s for action.

A mature decay-aware system automatically:

Flags relevance degradation before KPI collapse

Triggers contextual replay on decaying segments

Benchmarks alternate model versions in replay mode

Reflexively deploys the most stable variant

In short: DAP → Replay → Relearn → Reflex
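Here is a minimal sketch of one pass through that loop. It flags decaying windows, replays them against candidate variants, and picks the most stable one. Several parts are illustrative assumptions rather than part of DAP as described here:
- The helper names.
- The 0.05 tolerance.
- The "stability" score, defined as mean replay accuracy minus its standard deviation.

Relearning (retraining) is left out. Candidate variants are assumed to be already trained, and actual deployment is left to the caller.

```python
from statistics import mean, pstdev


def window_accuracy(model, window):
    """Accuracy of `model` on a window of (features, label) pairs."""
    return sum(model(x) == y for x, y in window) / len(window)


def flag_decaying(windows, model, baseline, tolerance):
    """Windows where accuracy sits more than `tolerance` below the training baseline."""
    return [w for w in windows if baseline - window_accuracy(model, w) > tolerance]


def most_stable(variants, replay_windows):
    """Name of the variant with the best mean replay accuracy minus its spread.

    The mean-minus-std score is an illustrative stability criterion (assumption).
    """
    def score(model):
        accs = [window_accuracy(model, w) for w in replay_windows]
        return mean(accs) - pstdev(accs)

    return max(variants, key=lambda name: score(variants[name]))


def decay_aware_step(windows, current, variants, baseline, tolerance=0.05):
    """One pass of flag -> replay -> benchmark; returns a variant name or None.

    Retraining ("Relearn") is out of scope: variants are assumed pre-trained.
    """
    decaying = flag_decaying(windows, current, baseline, tolerance)
    if not decaying:
        return None  # nothing breached tolerance; keep the current model
    return most_stable(variants, decaying)


# Usage with two hypothetical threshold classifiers over a 1-D score.
def current(x):
    return int(x > 0.5)


def lower_threshold(x):
    return int(x > 0.35)


windows = [
    [(0.2, 0), (0.8, 1), (0.6, 1), (0.1, 0)],   # calm window: current model is 100% accurate
    [(0.4, 1), (0.45, 1), (0.9, 1), (0.3, 0)],  # shifted window: current model drops to 50%
]
variants = {"current": current, "lower_threshold": lower_threshold}
choice = decay_aware_step(windows, current, variants, baseline=0.95)
print(choice)  # lower_threshold
```

Only the flagged windows are replayed. Variants are therefore compared on exactly the conditions where the current model is fading, not on its overall average.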

💡 Core Insight

“Don’t ask how well your model performs—ask how fast that performance is fading.”

In adaptive systems, sustainability beats one-time wins.

If your model decays slowly and adapts early, you’ve built something robust.

Otherwise, it’s just a matter of time.

📌 Coming in Part 5:

How LLM agents use token half-life and reflexive planning

Why static reasoning chains fail in long-horizon tasks

Designing agents that think with time, not just in steps