What We’re Learning About How AI Really "Thinks"

In a Wall Street Journal article, Christopher Mims captures a timely insight:

Today’s AI systems are barely thinking at all.

Despite bold predictions from companies like OpenAI, Anthropic, and Google that human-level artificial intelligence is near, mounting research suggests otherwise.

Today's most advanced AI models do not reason about the world as humans do.

Instead, they simulate intelligence by memorizing endless rules of thumb — vast bags of heuristics — and selectively applying them.

Mechanistic interpretability research, a field that seeks to open up the "black boxes" of AI, shows these models are not constructing internal, causal world models.

Rather, they are stitching together ad hoc shortcuts, learned statistically, with no underlying understanding of cause and effect.

This has profound implications not just for how we talk about AI — but for where and how we choose to rely on it.

The Manhattan Experiment: A Metaphor for AI’s Limits

One striking experiment reveals the underlying fragility.

Researchers trained a model on millions of turn-by-turn directions across Manhattan.

The model could give correct turn-by-turn directions between two points 99% of the time.

At first glance, it appeared to have learned Manhattan.

But when researchers reconstructed the model’s internal "mental map," they found something surreal:

Streets leaping over Central Park.

Impossible diagonal shortcuts through buildings.

Whole neighborhoods misaligned or missing.

The AI hadn't understood the city.

It had simply memorized countless local patterns of navigation — and when asked to connect unfamiliar dots, it improvised plausibly, not correctly.

When researchers blocked just 1% of streets to simulate roadwork detours, the model’s navigation abilities collapsed.

It couldn't infer new routes.

It hadn’t learned how Manhattan worked — only how to replay what it had seen.

This experiment is not a trivial curiosity.

It is a lens into how AI models behave across domains — in markets, in manufacturing, in critical infrastructure.
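The detour result can be mimicked in a toy setting: a system that only replays stored routes has nothing to fall back on once a remembered street closes, while one that holds an explicit map of the streets just searches for another path. This is a minimal sketch, not a reconstruction of the researchers' experiment. The 3x3 grid, the `memorized_routes` table, and the helpers `grid_streets`, `replay_route`, and `plan_route` are all hypothetical, and breadth-first search stands in for "a model of how the city works."

```python
from collections import deque

def grid_streets(n):
    """Hypothetical toy city: an n x n grid; each street joins two adjacent intersections."""
    streets = set()
    for r in range(n):
        for c in range(n):
            if c + 1 < n:
                streets.add(frozenset({(r, c), (r, c + 1)}))
            if r + 1 < n:
                streets.add(frozenset({(r, c), (r + 1, c)}))
    return streets

def replay_route(memorized_routes, start, goal, open_streets):
    """Memorization strategy: replay a stored route, or give up if any leg is now closed."""
    route = memorized_routes.get((start, goal))
    if route is None:
        return None
    for a, b in zip(route, route[1:]):
        if frozenset({a, b}) not in open_streets:
            return None  # the remembered path is blocked and there is no fallback
    return route

def plan_route(start, goal, open_streets):
    """Map-based strategy: breadth-first search over the streets that are currently open."""
    adjacency = {}
    for street in open_streets:
        a, b = tuple(street)
        adjacency.setdefault(a, []).append(b)
        adjacency.setdefault(b, []).append(a)
    previous = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            # Walk back through predecessors to rebuild the path.
            path = []
            while node is not None:
                path.append(node)
                node = previous[node]
            return path[::-1]
        for nxt in adjacency.get(node, []):
            if nxt not in previous:
                previous[nxt] = node
                queue.append(nxt)
    return None

streets = grid_streets(3)
memorized_routes = {((0, 0), (2, 2)): [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2)]}

# Simulated roadwork: close one street on the remembered route.
open_streets = streets - {frozenset({(0, 1), (0, 2)})}

print(replay_route(memorized_routes, (0, 0), (2, 2), open_streets))  # None
print(plan_route((0, 0), (2, 2), open_streets))  # a detour through 5 intersections
```

Closing a single street is enough to break the replay strategy, while the search still finds a route of the same length.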

Memorization at Scale: Useful, but Brittle

Today’s AI systems work through a deceptively simple recipe:

Memorize enormous volumes of patterns.

Match surface features of new inputs to those patterns.

Stitch together plausible outputs based on statistical proximity.

In static, well-bounded environments, this method works brilliantly.

In dynamic, evolving environments, it fails.
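The memorize, match, and stitch loop described above can be caricatured as a nearest-neighbor lookup. This is a deliberately crude sketch, assuming inputs are numeric feature vectors. Real language models do not store a literal table like this, and the names `memorize` and `respond` are hypothetical. The sketch shows the property the section describes: the answer is always whatever stored pattern is closest, even when the new input lies far outside anything seen before.

```python
import math

def memorize(examples):
    """Step 1: store (input, output) pairs verbatim."""
    return list(examples)

def respond(memory, query):
    """Steps 2-3: find the stored input closest to the query and replay its output.
    Also return the distance, a crude signal of how unfamiliar the query is."""
    best_input, best_output = min(memory, key=lambda pair: math.dist(pair[0], query))
    return best_output, math.dist(best_input, query)

# Hypothetical training data: the true rule is x + y, seen only for small x and y.
memory = memorize([((x, y), x + y) for x in range(-3, 4) for y in range(-3, 4)])

print(respond(memory, (1, 2)))      # (3, 0.0): familiar input, correct answer
print(respond(memory, (100, 100)))  # (6, ~137.2): replays the nearest stored answer, not 200
```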

Humans do not operate this way.

We reason.

We infer causality.

We adapt when faced with the unexpected.

When the world shifts — when new forces emerge, new stresses appear, new combinations arise — memorization alone is not enough.

Fragility in the Real World: Asset Management

Nowhere is this limitation clearer than in capital markets.

Quantitative investment models trained on historical asset relationships often perform well — until they don’t.

When monetary policy regimes shift, geopolitical risks escalate, or structural market changes unfold, memorized historical correlations break.

A portfolio construction model that "remembers" how equities and rates interacted over the past decade may misfire catastrophically in a new regime.

Without causal reasoning — without the ability to infer why assets were correlated, and whether those causes still apply — such systems are brittle.

Successful asset managers know: in times of stress, it's not pattern recognition that preserves portfolios.

It’s inference, adaptation, and dynamic re-understanding of market structures.
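A small simulation makes the regime-shift problem concrete. The data below is synthetic, generated on the spot, and not drawn from any real market. The two-regime setup, the coefficients, the seed, and the helper names (`simulate_regime`, `ols_slope`, `pearson`) are illustrative assumptions. A hedge ratio fitted on the first regime, where equity and rate changes move together, points the wrong way once the relationship flips sign.

```python
import random

def mean(xs):
    return sum(xs) / len(xs)

def variance(xs):
    m = mean(xs)
    return sum((x - m) ** 2 for x in xs) / len(xs)

def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return cov / (len(xs) * (variance(xs) * variance(ys)) ** 0.5)

def ols_slope(xs, ys):
    """Least-squares slope of ys regressed on xs."""
    mx, my = mean(xs), mean(ys)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (len(xs) * variance(xs))

def simulate_regime(rng, n, beta):
    """Synthetic daily changes: equity = beta * rates + noise (hypothetical model)."""
    rates = [rng.gauss(0, 1) for _ in range(n)]
    equity = [beta * r + rng.gauss(0, 0.6) for r in rates]
    return rates, equity

rng = random.Random(7)
rates_old, eq_old = simulate_regime(rng, 1000, beta=0.8)   # the history the model learned from
rates_new, eq_new = simulate_regime(rng, 1000, beta=-0.8)  # after a hypothetical policy shift

hedge_ratio = ols_slope(rates_old, eq_old)  # the "memorized" relationship, about +0.8

# Apply the old hedge in the new regime: short hedge_ratio units of rates per unit of equity.
hedged_new = [e - hedge_ratio * r for r, e in zip(rates_new, eq_new)]

print(round(pearson(rates_old, eq_old), 2), round(pearson(rates_new, eq_new), 2))
print(round(variance(eq_new), 2), round(variance(hedged_new), 2))
```

In the new regime the position hedged with the old ratio ends up with roughly three times the variance of the unhedged one: the hedge amplifies risk instead of reducing it, because nothing in the fitted slope records why the two series used to move together.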

Fragility in the Real World: High-Tech Manufacturing

The same fragility appears in high-tech manufacturing and industrial IoT environments.

Predictive maintenance systems often rely on memorized fault patterns derived from historical sensor data.

But when new materials are introduced, processes evolve, or operational conditions change, those historical patterns become unreliable.

Imagine a semiconductor fab introducing a new composite material with subtle but significant thermal stress behaviors.

A predictive model trained on older materials may miss the new failure modes entirely.

Without causal models of how stresses propagate and failures emerge, memorization-based systems can offer false assurances — and allow catastrophic risks to build unnoticed.
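The false-assurance problem can be sketched with a detector that only recognizes fault signatures it has already seen. Everything here is hypothetical: the sensor fields, signature values, training ranges, and `MATCH_RADIUS` are invented for illustration and do not describe any real fab. A reading from a new failure mechanism matches no stored signature, so `classify` reports it as healthy. The separate `outside_training_range` check at least flags that the memorized patterns no longer apply.

```python
import math

# Hypothetical fault signatures memorized from historical sensor data:
# (temperature in deg C, vibration in mm/s) -> fault label.
KNOWN_FAULTS = {
    (85.0, 12.0): "bearing wear",
    (95.0, 4.0): "coolant blockage",
}
# Hypothetical (min, max) range seen during training for each sensor, in the same order.
TRAINING_RANGES = [(60.0, 100.0), (1.0, 15.0)]
MATCH_RADIUS = 5.0  # hypothetical: max distance from a stored signature to count as a match

def classify(reading):
    """Memorization-based diagnosis: return the first known fault within MATCH_RADIUS, else 'healthy'."""
    for signature, label in KNOWN_FAULTS.items():
        if math.dist(signature, reading) <= MATCH_RADIUS:
            return label
    return "healthy"

def outside_training_range(reading):
    """True if any sensor value falls outside what the memorized patterns were built from."""
    return any(not (lo <= value <= hi) for value, (lo, hi) in zip(reading, TRAINING_RANGES))

# A familiar fault, a normal reading, and a hypothetical new thermal-stress failure mode.
for reading in [(86.0, 11.0), (70.0, 3.0), (130.0, 2.0)]:
    print(reading, classify(reading), outside_training_range(reading))
```

A range check like this is only a guardrail. A new failure mode that stays inside the historical envelope would slip past both functions, which is why causal models of how stresses propagate matter.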

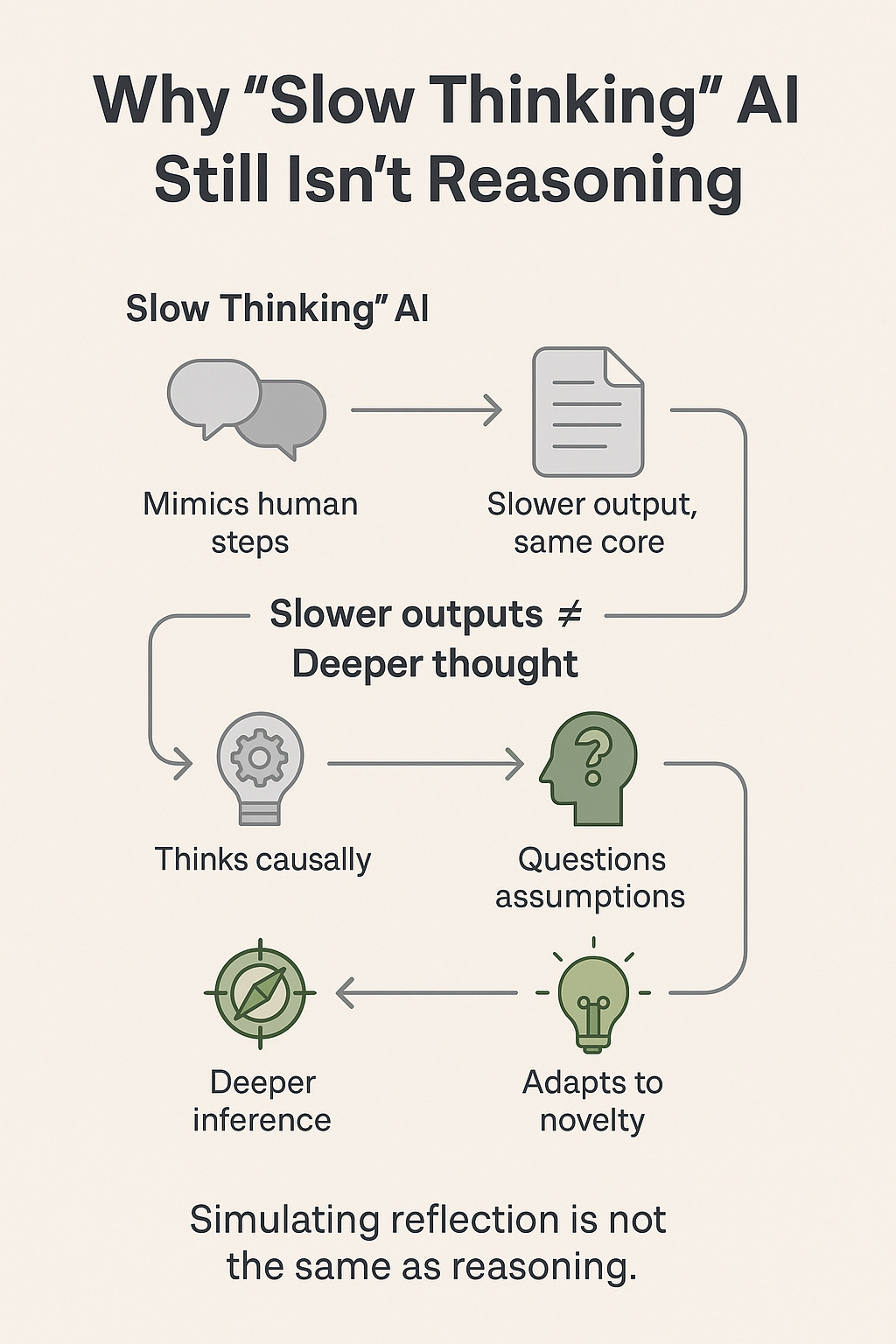

The Mirage of "Slow Thinking" AI

Aware of these challenges, some AI developers have promoted techniques like chain-of-thought prompting and tree-of-thought exploration, claiming that AI models are becoming more reflective.

In theory, encouraging AI to reason step by step should lead to deeper understanding.

In practice, it does not.

Chain-of-thought prompting simply slows the process of pattern replay.

The model still draws from memorized patterns of how humans appear to reason — not from genuine causal reasoning.

The AI may sound more thoughtful, but it is still interpolating familiar patterns, not building dynamic new models of unfamiliar realities.

Slowing memorization does not create understanding.

It only creates the illusion of thoughtfulness.

The Aha: What We Are Actually Building

What looks like reasoning today is sophisticated pattern matching.

What looks like understanding is memorized recombination.

We are not yet building thinking machines.

We are building memorization machines — larger, faster, more polished than ever.

And when reality deviates from the past — as it inevitably does — the limitations of these systems become dangerously exposed.

Final Reflection

Today's AI is extraordinary.

It is reshaping industries, accelerating discovery, and augmenting human capabilities.

But it is not yet thinking.

It is not yet understanding.

It is not yet resilient to novelty.

Memorization at scale is a technological marvel — but it is not a replacement for real-world reasoning.

Until AI can build causal models, reason under uncertainty, and adapt dynamically, the gap between simulation and true intelligence remains profound.

Recognizing that gap — and designing for it — is the challenge of the next era.

What’s Next: Moving Beyond Memorization to Temporal Dominance

Today’s AI systems are still designed for static pattern recognition.

But the real world — markets, machines, biology — is dynamic, not static.

In future blog posts, I’ll explore how true intelligence comes from modeling state transitions over time, not just memorizing static patterns.

The next frontier is temporal dominance:

Seeing the world as a sequence of hidden state changes,

Forecasting transitions, not just recognizing current conditions,

Acting within narrow windows where small advantages compound.

Understanding signals as events in motion — not snapshots — will define the systems that thrive when memorization falls short.
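One textbook way to treat the world as a sequence of hidden state changes is a hidden Markov model. A filter updates the probability of each hidden state as each observation arrives, then projects that belief one step forward to forecast the next transition. This is a swapped-in standard technique used only for illustration, not necessarily the approach these future posts will take. The two regimes, the transition and emission probabilities, and the observation sequence are all hypothetical.

```python
# Hypothetical two-regime world: index 0 = "calm", index 1 = "stressed".
STATES = ("calm", "stressed")
# TRANSITION[i][j] = P(next state j | current state i)
TRANSITION = [[0.95, 0.05],
              [0.10, 0.90]]
# EMISSION[i][k] = P(observation k | state i); observations: 0 = small move, 1 = large move
EMISSION = [[0.9, 0.1],
            [0.3, 0.7]]

def predict(belief):
    """Project the current belief one step forward through the transition matrix."""
    return [sum(belief[i] * TRANSITION[i][j] for i in range(2)) for j in range(2)]

def update(prior, observation):
    """Bayes update of a predicted belief with the latest observation."""
    unnormalized = [prior[j] * EMISSION[j][observation] for j in range(2)]
    total = sum(unnormalized)
    return [p / total for p in unnormalized]

def filter_states(observations, initial=(0.5, 0.5)):
    """Forward filtering: alternate predict and update; return the belief after each step."""
    belief = list(initial)
    history = []
    for obs in observations:
        belief = update(predict(belief), obs)
        history.append(belief)
    return history

observations = [0, 0, 0, 1, 1, 1]  # hypothetical: quiet, then a run of large moves
beliefs = filter_states(observations)
for obs, b in zip(observations, beliefs):
    print(obs, dict(zip(STATES, (round(p, 3) for p in b))))

# Forecast: probability of being in the stressed regime at the next step.
print(round(predict(beliefs[-1])[1], 3))
```

The `predict` step is what separates this from pattern lookup: it encodes an explicit assumption about how states evolve, so the filter can forecast a transition rather than only label the current snapshot.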

More soon.

I've linked this on my CoSy.com/DailyBlog.html.

(CoSy evolves from K.)